The Evolution of Machine Learning and the Rise of Deep Learning

In the vast realm of data science, the evolution of machine learning has been remarkable, shaping the way we solve challenges and unravel insights from complex datasets. From humble beginnings, relying on human-defined classifiers, to the present era of massive data influx from various sources, the need for more sophisticated techniques became evident. This paved the way for deep learning, a revolutionary approach that automates feature extraction through intricate deep neural networks (DNNs).

In this educational mini-series, we will help you understand the dynamics of deep learning, its applications, and the pivotal role of GPU acceleration.

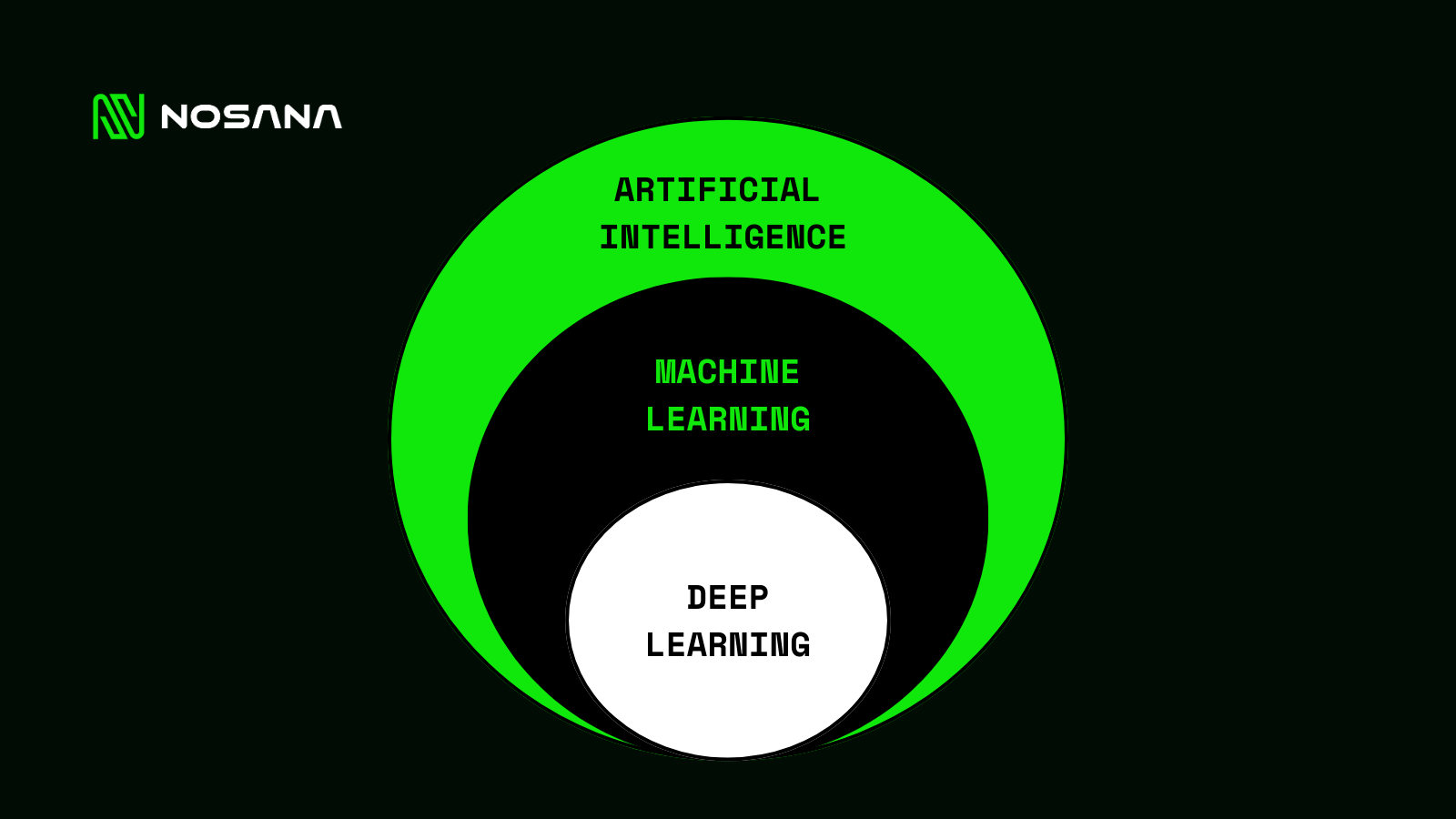

Machine Learning vs. Deep Learning:

Machine learning uses statistical techniques to construct a model from observed data. It generally relies on human-defined classifiers or feature extractors, which can be as simple as linear regression or the slightly more complicated bag of words technique that made some of the first email spam filters possible.

Evolution of Challenges: This approach was convenient 20-plus years ago when uninvited emails flooded inboxes. However, with the invention of smartphones, webcams, social media services, and various sensors, the challenge shifted to identifying the relevant features in the data and the correlations between them.

Deep Learning's Solution: Deep learning is a machine-learning technique that automates the creation of these feature extractors, a process we call feature engineering, using large amounts of data to train complex deep neural networks or DNNs. DNNs are capable of achieving human-level accuracy for many tasks but require huge datasets and tremendous computational power to train them.

Applications of GPU-Accelerated Data Science: Realizing Effectiveness and Efficiency

Let's explore how deep learning fits into the broader context of GPU-accelerated data science with a few examples:

Healthcare Innovations: In healthcare, accelerated data science can be used to better predict disease drivers with genomic medicine, improve health outcomes through the analysis of electronic medical records, or predict the best care or treatment for a wide range of health conditions.

Energy and Utility Optimization: Energy and utility companies can use accelerated data science to optimize distribution and smart grids, reducing outages with predictive maintenance.

Enterprise Insights: Enterprises large and small can utilize accelerated data science to analyze customer data for new product development, monitor the security of their IT systems and physical facilities, and implement reports that reveal deeper insights for business decision-makers.

The Rapid Rise of Deep Learning: Key Ingredients and Adoption Factors

Deep learning is stunningly effective across many domains, transforming the way computers achieve perceptual tasks such as computer vision, pattern detection, speech recognition, and behavior prediction.

Key Adoption Factors: Many people want to know why and how deep learning is being adopted so rapidly. Three key ingredients, or the initial conditions that made the big bang of modern AI possible, played a crucial role.

Big Data Revolution: The huge collections of examples from which computers can learn.

Parallel Algorithms: Researchers needed to develop parallel algorithms that allow data scientists to design and train deep neural networks to process mountains of data.

GPU Acceleration: The introduction of programmable GPU accelerators made it possible for researchers to implement their algorithms on a flexible platform and complete the massive amounts of computation required in a practical amount of time.

Open-Source Contributions: Fueling Collaboration and Progress

Early researchers published their algorithms and neural network models in research papers and scientific journals. Thankfully, they also released the software they created, packaged up in high-level open-source frameworks. These frameworks enabled others to build on top of their work instead of starting from scratch, and all the major deep learning frameworks support GPU acceleration because it just wasn't practical to do this computationally intense work without GPUs.

Diverse Architectures for Varied Tasks: Deep Neural Network Models

Different types of deep learning tasks require different model architectures. There are a variety of representative deep neural network models that have been simplified to highlight their differences.

Workhorse of Image Analysis: Convolutional Neural Networks (CNNs): CNNs are the workhorse for analyzing 2D or 3D images and video, including classification, prediction, and segmentation tasks.

Versatile Sequences: Recurrent Neural Networks (RNNs): RNNs have been shown to work well on many types of sequences, including natural language processing tasks such as sentiment analysis, speech recognition, machine translation, handwriting and gesture recognition, and time series forecasting. Realism in Generation: Generative Adversarial Networks (GANs): GANs are two deep neural networks competing with each other to generate images or other results that are incredibly realistic.

Automated Decision-Making: Reinforcement Learning: Reinforcement learning is used to automatically determine the ideal behavior or decision within a specific context.

Innovations in Model Development: Large Language Models (LLMs) and Transformer Architecture

Let's talk about one of the most innovative areas of model development today - Large Language Models, or LLMs. Advances in neural network architecture, most notably the transformer architecture, or transformer for short, enable LLMs by allowing them to train on enormous amounts of data. The transformer, introduced in 2017, has rapidly become a dominant building block of modern neural networks. This is a huge leap from previous architectures, which were generally limited to considering only the closest relationships within data or relatively short data sequences.

Design Considerations for Neural Network Models: Tailoring for Specific Tasks

Just keep in mind that the design of a neural network model is what makes it suitable for a particular task. For example, the best models for image classification are very different from the best models for speech recognition. The differences can include the number of layers, the number of nodes in each layer, the algorithms performed in each node, and how the nodes in one layer are connected to nodes in the next layer.

Ready-Made Models and the Need for Customization: Computation Intensity and GPU Acceleration

It's worth noting that there are many readily available pre-trained deep neural network models for image classification, object recognition, image segmentation, and several other tasks. However, it is often necessary to modify and retrain these models to achieve high levels of accuracy for a particular dataset or use case. The amount of computing needed to train and validate each model version is multiplied by the number of model variations to be evaluated. All of this processing requires a tremendous amount of computation, much of which can be performed in parallel, making deep learning an ideal workload for GPUs to accelerate.

Delving Deeper into Practical Applications

In the upcoming weeks, we'll delve deeper into the practical applications of deep learning, explore real-world examples, and unveil the synergy between deep learning and GPU acceleration.

Stay tuned for the next chapter, where we unravel the intricate fabric of deep learning training and inference.

About Nosana

Nosana is an open-source cloud computing marketplace dedicated to AI inference. Their mission is simple: make GPU computing more accessible at a fraction of the cost. The platform has two main goals: providing AI users with flexible GPU access and allowing GPU owners to earn passive income by renting out their hardware.

By offering affordable GPU power, Nosana enables AI users to train and deploy models faster without expensive hardware investments, all powered by the $NOS token. Access compute for a fraction of the cost or become a compute supplier at Nosana.io.

Website | Documentation | Twitter | Discord | Telegram | LinkedIn